StyleFaceUV: a 3D Face UV Map Generator for View-Consistent Face Image Synthesis

Wei-Chieh Chung*1 Jian-Kai Zhu*1 I-Chao Shen2 Yu-Ting Wu3 Yung-Yu Chuang1(*: joint first authors)

National Taiwan University1 The University of Tokyo2 National Taipei University3

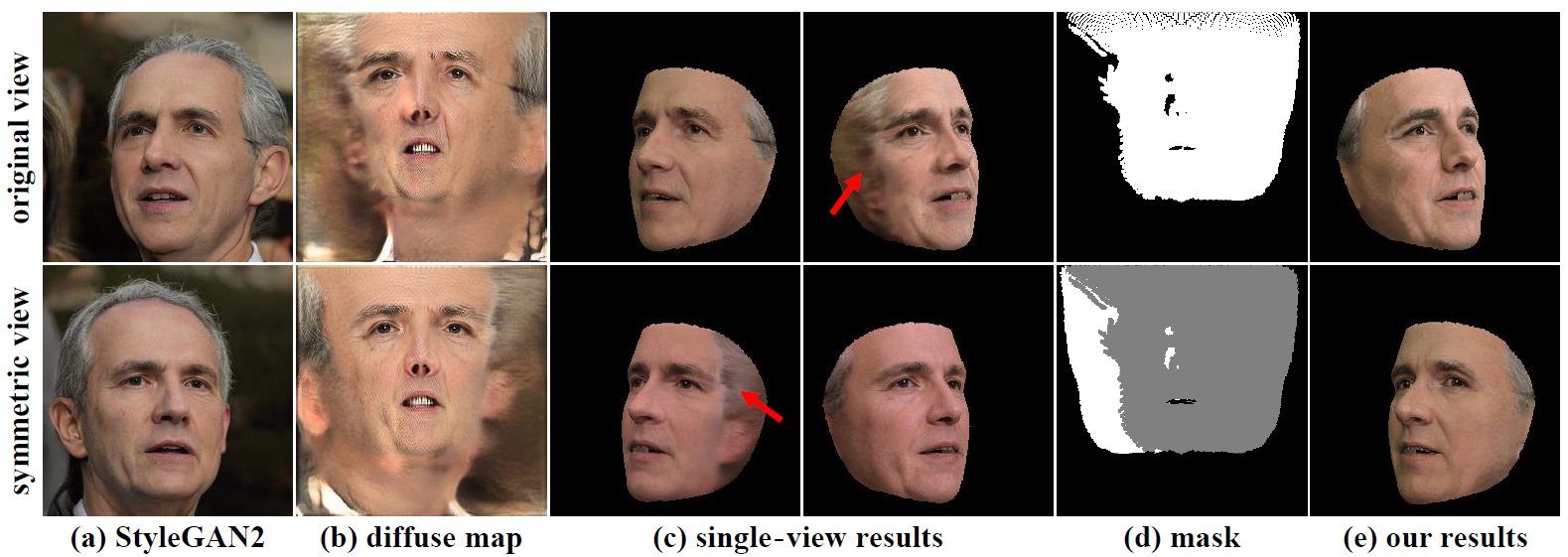

When only one view is used to generate a face model, (c) the result will usually include artifacts. (e) Our method generates a high-quality model of the entire face by integrating of information from the original and symmetric views.

Abstract

Recent deep image generation models, such as StyleGAN2, face challenges to produce high-quality 2D face images with multi-view

consistency. We address this issue by proposing an approach for generating detailed 3D faces using a pre-trained StyleGAN2 model.

Our method estimates the 3D Morphable Model (3DMM) coefficients directly from the StyleGAN2’s stylecode. To add more details to

the produced 3D face models, we train a generator to produce two UV maps: a diffuse map to give the model a more faithful

appearance and a generalized displacement map to add geometric details to the model. To achieve multi-view consistency, we also

add a symmetric view image to recover information regarding the invisible side of a single image. The generated detailed 3D face

models allow for consistent changes in viewing angles, expressions, and lighting conditions. Experimental results indicate that

our method outperforms previous approaches both qualitatively and quantitatively.

Publication

Wei-Chieh Chung*, Jian-Kai Zhu*, I-Chao Shen, Yu-Ting Wu, Yung-Yu Chuang (*: joint first authors)

StyleFaceUV: a 3D Face UV Map Generator for View-Consistent Face Image Synthesis.

Proceedings of British Machine Vision Conference (BMVC) 2022. BibTex

BMVC 2022 Paper (5.6MB PDF)

Digital library

Supplemental

BMVC 2022 supplementary document (6.0MB PDF)

BMVC 2022 poster (1.4MB PDF)