Learning to Cluster for Rendering with Many Lights

Yu-Chen Wang¹, Yu-Ting Wu¹, Tzu-Mao Li²,³, Yung-Yu Chuang¹

¹National Taiwan University, ²MIT CSAIL, ³University of California San Diego

All results were generated on a machine with an 8-core Intel Core i7-9700 CPU (using 4 cores) and 32 GB of RAM.


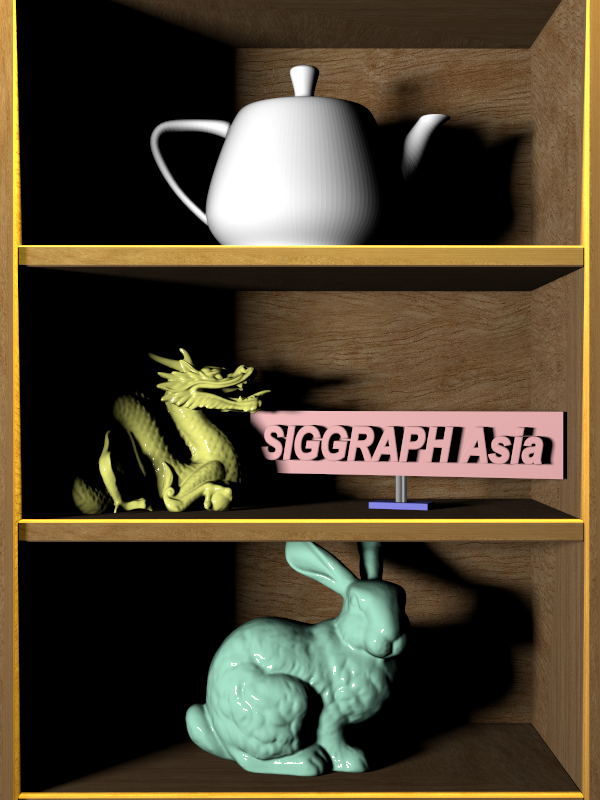

Scenes (thumbnails not reproduced here). Time budgets listed under the scene thumbnails, in page order: 120 s, 120 s, 120 s, 120 s, 60 s, 360 s, 480 s, 30 s, 30 s, and 60 s.

Comparisons are provided in two modes: Result Comparison and Ablation Study.

Compared methods:

Stochastic Lightcuts (SLC) [Yuksel 2019]

Resampled Importance Sampling (RIS) [Talbot et al. 2005, Bitterli et al. 2020]

Bayesian Online Regression for Adaptive Direct Illumination Sampling (BORAS) [Vevoda et al. 2018]

Variance-aware BORAS (VA-BORAS) [Rath et al. 2020, Vevoda et al. 2018]

Importance Sampling of Many Lights with Reinforcement Lightcuts Learning (RLL) [Pantaleoni 2019]
