Preserving Photographic Defocus in Stylised Image Synthesis

Hong-Yi Wang, Yu-Ting Wu

National Taipei University

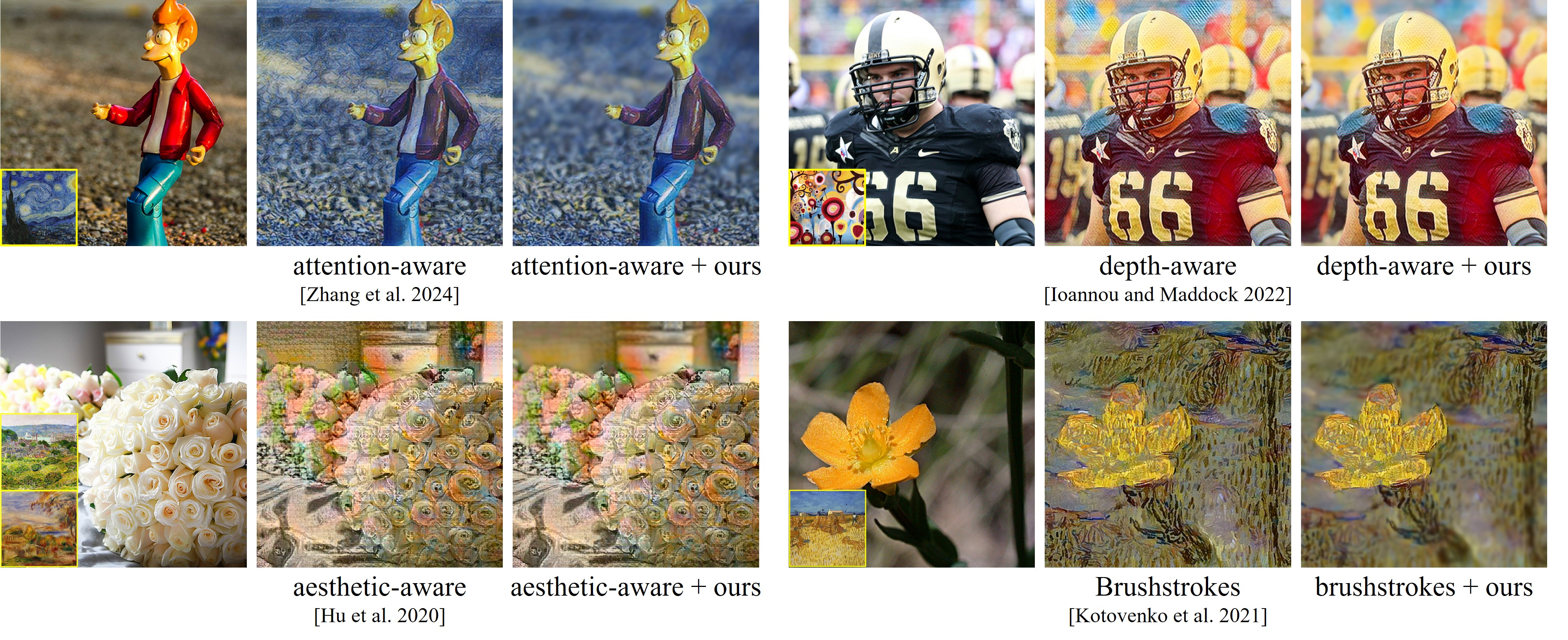

By integrating our method with existing style transfer techniques, including attention-aware [Zhang et al. 2024], depth-aware [Ioannou and Maddock 2022], aesthetic-aware [Hu et al. 2020], and parameterized brushstroke-based [Kotovenko et al. 2021] methods, we effectively preserve the original defocus effects intended by the photographer. Incorporating defocus into the stylized outputs further enhances structural coherence: the background fades naturally, and the focal regions are better separated and emphasized. For each method, the corresponding content and style images are presented in the first column.

Abstract

While style transfer has been extensively studied, most existing approaches fail to account for the defocus effects inherent in content images, thereby compromising the photographer's intended focus cues. To overcome this shortcoming, we introduce an optimisation-based post-processing framework that restores defocus characteristics to stylised images, regardless of the style transfer technique used. Our method begins by estimating a blur map with a data-driven model that predicts pixel-level blur magnitudes. This blur map then guides a layer-based defocus rendering framework, which simulates depth-of-field (DoF) effects using a Gaussian filter bank. To map the blur values to appropriate kernel sizes in the filter bank, we introduce a neural network that determines the optimal maximum filter size, ensuring both content integrity and stylistic fidelity. Experimental results, both quantitative and qualitative, show that our method significantly improves stylised images by preserving the original depth cues and defocus details.
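To make the rendering step concrete, the following is a minimal sketch of blur-map-guided, layer-based defocus rendering with a Gaussian filter bank. It is an illustration under stated assumptions, not the paper's implementation: the function names (`gaussian_kernel`, `blur`, `render_defocus`) are hypothetical, the blur map is assumed to be normalised to [0, 1], the layers are blended by simple linear interpolation between adjacent filter-bank levels, and `max_sigma` is a hand-set parameter standing in for the maximum filter size that the paper obtains from a neural network.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian kernel covering roughly +/- 3 sigma."""
    radius = max(1, int(np.ceil(3.0 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def blur(image, sigma):
    """Separable Gaussian blur of an H x W x C image with edge padding."""
    out = image.astype(np.float64)
    if sigma <= 0:
        return out  # sigma = 0 is the sharp (in-focus) layer
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    for axis in (0, 1):  # filter rows, then columns
        pad = [(0, 0)] * out.ndim
        pad[axis] = (r, r)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, k, mode="valid"), axis, padded
        )
    return out


def render_defocus(stylised, blur_map, max_sigma, n_layers=5):
    """Blend a Gaussian filter bank per pixel according to the blur map.

    stylised : H x W x C array, the style-transfer output
    blur_map : H x W array in [0, 1] (assumed normalisation)
    max_sigma: largest Gaussian sigma in the bank (assumed hand-set here)
    n_layers : number of filter-bank levels, at least 2
    """
    sigmas = np.linspace(0.0, max_sigma, n_layers)
    bank = np.stack([blur(stylised, s) for s in sigmas])  # (L, H, W, C)

    # Fractional layer index for every pixel.
    pos = np.clip(blur_map, 0.0, 1.0) * (n_layers - 1)
    lo = np.floor(pos).astype(int)
    hi = np.minimum(lo + 1, n_layers - 1)
    w = (pos - lo)[..., None]  # interpolation weight, broadcast over channels

    rows, cols = np.indices(blur_map.shape)
    return (1.0 - w) * bank[lo, rows, cols] + w * bank[hi, rows, cols]
```

Calling `render_defocus(stylised, blur_map, max_sigma=4.0)` leaves pixels with a blur value of 0 untouched and applies the strongest filter where the blur value is 1. Precomputing a small bank and interpolating keeps the cost at a fixed number of full-image blurs, at the price of ignoring occlusion effects at depth boundaries.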

Publication

Hong-Yi Wang, Yu-Ting Wu.

Preserving Photographic Defocus in Stylised Image Synthesis.

Computer Graphics Forum, to appear. BibTeX (coming soon)

CGF paper (author version)

Digital library (early access)

Supplemental

Computer Graphics Forum supplementary document

Last Update: June 2025