ScannerNet: A Deep Network for Scanner-Quality Document Images under Complex Illumination

Chih-Jou Hsu¹  Yu-Ting Wu²  Ming-Sui Lee¹  Yung-Yu Chuang¹

¹National Taiwan University  ²National Taipei University

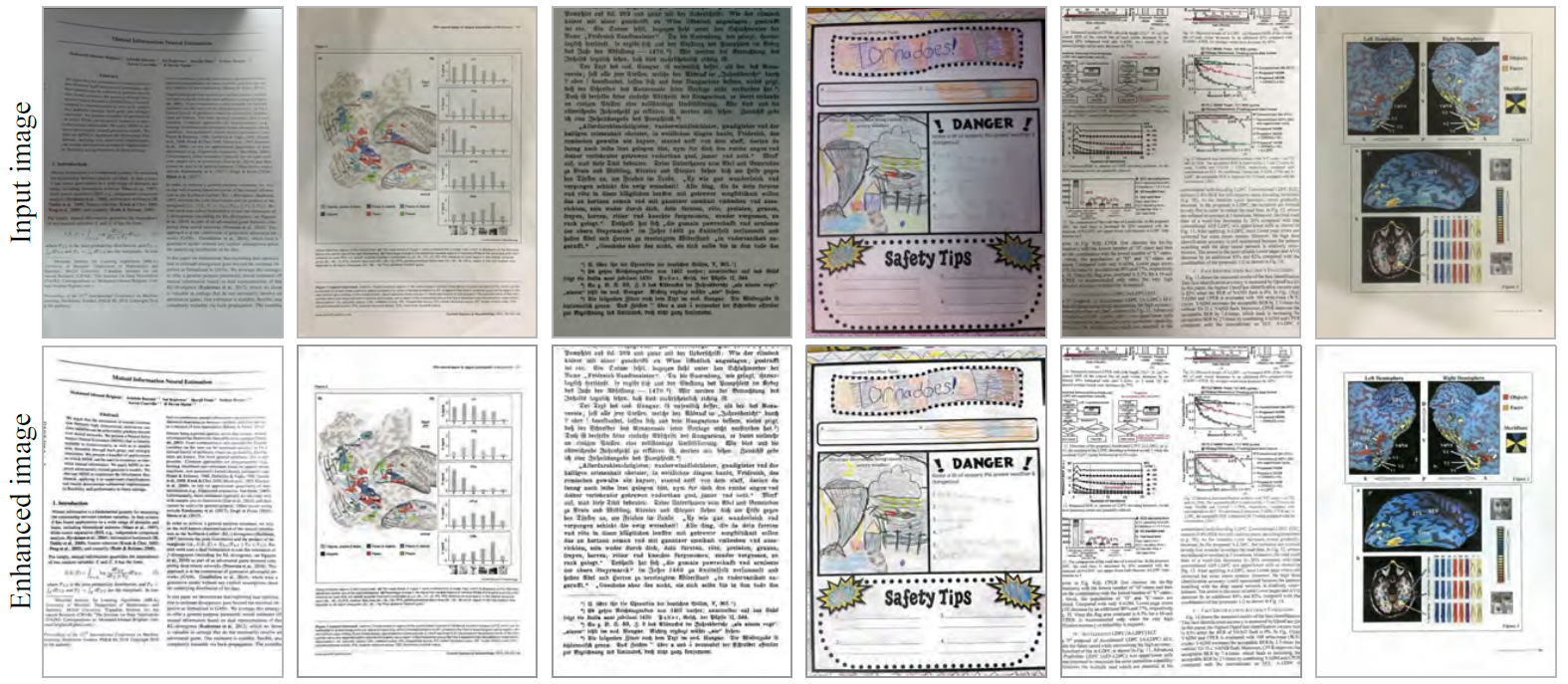

Photometric distortion correction. Our method effectively corrects complex photometric distortions in document images. The top row shows document images captured by cameras, and the bottom row shows the corresponding results of the proposed method, which corrects shadows, shading, and color shift simultaneously.

Abstract

Document images captured by smartphones and digital cameras often suffer from photometric distortions, including shadows, non-uniform shading, and color shift caused by imperfect sensor white balance. These distortions make the content hard to distinguish from the background, which significantly reduces legibility and visual quality. Although real photographs often contain a mixture of these distortions, most existing approaches to document illumination correction address only a small subset of them. This paper presents ScannerNet, a comprehensive deep-learning method that can eliminate complex photometric distortions. To exploit the different characteristics of shadow and shading, our model consists of a sub-network for shadow removal followed by a sub-network for shading correction. To train our model, we also devise a data synthesis method that efficiently constructs a large-scale document dataset with a great deal of variation. Our extensive experiments demonstrate that our method significantly enhances visual quality by removing shadows and shading, preserving figure colors, and improving legibility.
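The abstract describes two ideas: synthesizing distorted training images, and a cascade in which shadow removal runs before shading correction. The following sketch illustrates that structure under stated assumptions only. It uses a simple multiplicative image-formation model (observed = clean × shading × shadow × per-channel color gain), which is a common simplification and not necessarily the paper's synthesis procedure. It also replaces both learned sub-networks with hypothetical "oracle" stages that divide out the known maps. In this toy, the color cast is removed in the second stage; that placement is an assumption, not a statement about ScannerNet. All names (`synthesize`, `divide_map`, `divide_gain`, `cascade`, `remove_shadow`, `correct_shading`) are illustrative.

```python
# Toy sketch (NOT ScannerNet's actual code): synthesize a distorted document
# image with an ASSUMED multiplicative model, then undo it with a two-stage
# cascade (shadow removal first, then shading + color-cast correction).
# All names and values here are hypothetical.
from typing import Callable, List, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]   # rows of (r, g, b) pixels in [0, 1]
Map = List[List[float]]   # per-pixel scalar attenuation factors


def synthesize(clean: Image, shading: Map, shadow: Map, gain: RGB) -> Image:
    """Assumed model: observed = clean * shading * shadow * per-channel gain."""
    return [[(r * shading[y][x] * shadow[y][x] * gain[0],
              g * shading[y][x] * shadow[y][x] * gain[1],
              b * shading[y][x] * shadow[y][x] * gain[2])
             for x, (r, g, b) in enumerate(row)]
            for y, row in enumerate(clean)]


def divide_map(img: Image, m: Map) -> Image:
    """Undo a per-pixel scalar attenuation by element-wise division."""
    return [[(r / m[y][x], g / m[y][x], b / m[y][x])
             for x, (r, g, b) in enumerate(row)]
            for y, row in enumerate(img)]


def divide_gain(img: Image, gain: RGB) -> Image:
    """Undo a global per-channel color cast."""
    return [[(r / gain[0], g / gain[1], b / gain[2]) for (r, g, b) in row]
            for row in img]


def cascade(img: Image, stages: List[Callable[[Image], Image]]) -> Image:
    """Run stages in order; each stage sees the previous stage's output."""
    for stage in stages:
        img = stage(img)
    return img


# Hand-picked 2x2 example: white paper (0.9) with one dark text pixel (0.1).
clean: Image = [[(0.9, 0.9, 0.9), (0.1, 0.1, 0.1)],
                [(0.9, 0.9, 0.9), (0.9, 0.9, 0.9)]]
shading: Map = [[1.0, 0.8], [0.9, 0.7]]   # smooth illumination falloff
shadow: Map = [[1.0, 1.0], [0.5, 0.5]]    # hard shadow over the bottom row
gain: RGB = (1.0, 0.95, 0.8)              # warm cast from imperfect white balance

distorted = synthesize(clean, shading, shadow, gain)


# Oracle stand-ins for the two learned sub-networks: they divide out the
# known maps, which a real network would have to estimate from the image.
def remove_shadow(img: Image) -> Image:
    return divide_map(img, shadow)


def correct_shading(img: Image) -> Image:
    return divide_gain(divide_map(img, shading), gain)


restored = cascade(distorted, [remove_shadow, correct_shading])
```

Because the oracle stages know the exact maps, `restored` matches `clean` up to floating-point error. A learned model would instead have to infer the shadow and shading from the distorted image alone, which is where separating the two stages could help if, as the abstract suggests, shadows and shading have different characteristics.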

Publication

Chih-Jou Hsu, Yu-Ting Wu, Ming-Sui Lee, Yung-Yu Chuang.

ScannerNet: A Deep Network for Scanner-Quality Document Images under Complex Illumination.

Proceedings of the British Machine Vision Conference (BMVC) 2022. BibTeX

BMVC 2022 Paper (13.6MB PDF)

Digital library

Supplemental

BMVC 2022 supplementary document (45.3MB PDF)

BMVC 2022 poster (2.6MB PDF)